Types of machine learning algorithms with examples

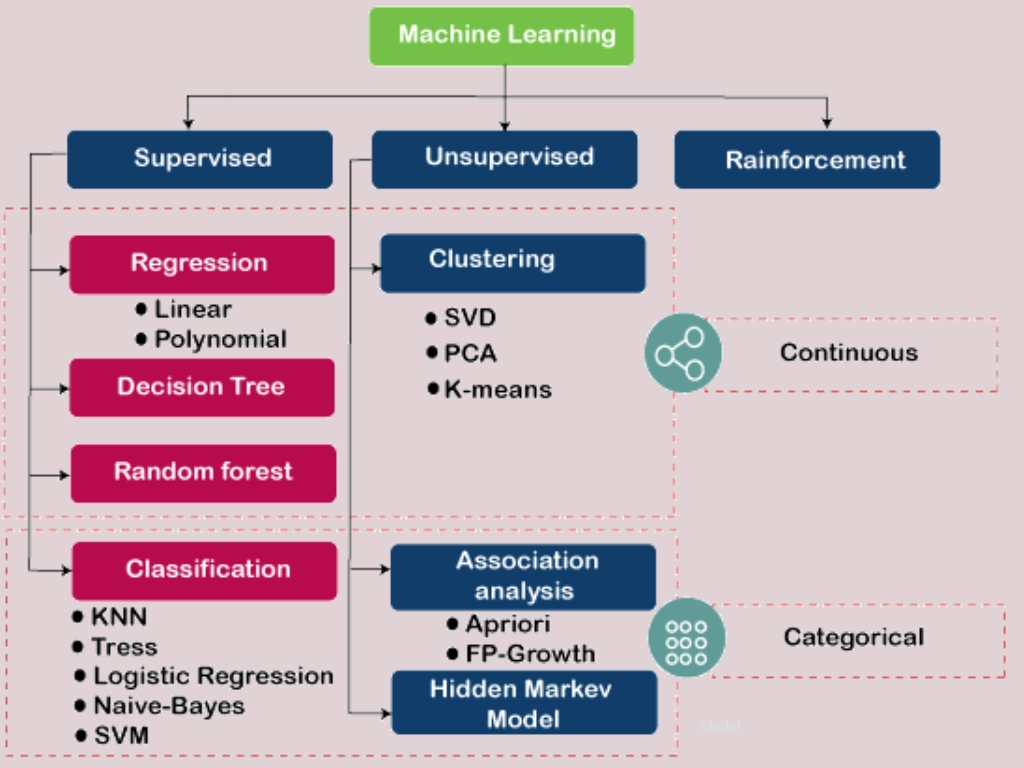

Machine learning algorithms can be applied to many data types and learning problems. They classify outcomes or cluster data points, even without prior labels, and they form a main component of contemporary AI systems. This article gives examples of various machine learning algorithms and explains how they work and where they are applied in practice.

- Unsupervised learning algorithms, such as K-means clustering, identify patterns in data that has not been labeled in advance.

- Supervised learning algorithms, such as linear regression and decision trees, are used when the data labels are known.

- Reinforcement learning algorithms, such as Q-learning, learn which behavior works best through repeated trial and error.

- Ensemble and deep learning algorithms improve a model’s prediction accuracy and robustness.

K-Means Clustering is a common technique for dividing data into K distinct, non-overlapping clusters. It assigns each data point to one of these clusters so that the distance between the data points and their cluster’s centroid, which is the mean of the points in the cluster, is as small as possible. K-Means is applied to market segmentation, document clustering, and image segmentation problems.
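The assign-then-update loop behind K-Means can be sketched in a few lines of plain Python. This is a minimal illustration rather than a production implementation: the function name `kmeans`, the toy `points`, the fixed iteration count, and the seed are all assumptions made for the example.

```python
import random

def kmeans(points, k, iterations=20, seed=0):
    """Lloyd's algorithm on a list of (x, y) tuples."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k distinct data points
    clusters = []
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            distances = [(p[0] - c[0]) ** 2 + (p[1] - c[1]) ** 2 for c in centroids]
            clusters[distances.index(min(distances))].append(p)
        # Update step: move each centroid to the mean of its cluster.
        for i, cluster in enumerate(clusters):
            if cluster:  # keep the old centroid if a cluster ends up empty
                centroids[i] = (
                    sum(p[0] for p in cluster) / len(cluster),
                    sum(p[1] for p in cluster) / len(cluster),
                )
    return centroids, clusters

# Toy data (assumed for illustration): two visibly separate groups.
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (9.0, 8.5), (8.5, 9.0)]
centroids, clusters = kmeans(points, k=2)
```

On this toy data each cluster ends up holding one of the two groups, and each centroid sits at the mean of its group.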

Hierarchical Clustering

This algorithm builds a tree of clusters in which each node is a cluster made up of its child clusters. Strategies for hierarchical clustering generally fall into two types: agglomerative, also called bottom-up, and divisive, also called top-down. In the agglomerative approach, each observation starts in its own cluster, and pairs of clusters are merged as one moves up the hierarchy.
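A bottom-up merge loop might look like the sketch below. It assumes one-dimensional values and single linkage (the distance between two clusters is the distance between their closest members); both choices, and the name `agglomerative`, are illustrative assumptions rather than the only way to do it.

```python
def agglomerative(values, target_clusters):
    """Single-linkage agglomerative clustering of 1-D values."""
    clusters = [[v] for v in values]  # every observation starts in its own cluster
    merges = []                       # record of which clusters were merged, in order
    while len(clusters) > target_clusters:
        best = None
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                # Single linkage: distance between the two closest members.
                d = min(abs(a - b) for a in clusters[i] for b in clusters[j])
                if best is None or d < best[0]:
                    best = (d, i, j)
        d, i, j = best
        merges.append((sorted(clusters[i]), sorted(clusters[j]), d))
        clusters[i] = clusters[i] + clusters[j]  # merge the closest pair
        del clusters[j]
    return [sorted(c) for c in clusters], merges

clusters, merges = agglomerative([1.0, 1.2, 5.0, 5.1, 9.0], target_clusters=3)
```

The `merges` list is the hierarchy read from the bottom up: the closest pair is merged first, then the next closest, and so on until the requested number of clusters remains.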

Principal Component Analysis (PCA)

PCA is a statistical procedure that highlights the dominant trends in data and reduces the data to simpler patterns. It is often used to make patterns easier to detect and data easier to visualize. PCA simplifies the data by transforming it into a new set of variables, the principal components, which are orthogonal to one another. The first few principal components capture most of the variance in the data.
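To make the idea concrete, here is a small sketch that finds the first principal component of two-dimensional data by power iteration on the covariance matrix. The function name, the toy `data`, and the choice of power iteration (instead of a full eigendecomposition from a numerical library) are assumptions made to keep the example dependency-free.

```python
import math

def first_principal_component(data, iterations=100):
    """Return the unit direction of largest variance for 2-D data."""
    n = len(data)
    mean_x = sum(p[0] for p in data) / n
    mean_y = sum(p[1] for p in data) / n
    centered = [(x - mean_x, y - mean_y) for x, y in data]
    # Entries of the 2x2 sample covariance matrix.
    cxx = sum(x * x for x, _ in centered) / (n - 1)
    cyy = sum(y * y for _, y in centered) / (n - 1)
    cxy = sum(x * y for x, y in centered) / (n - 1)
    # Power iteration converges to the eigenvector with the largest eigenvalue.
    v = (1.0, 0.0)
    for _ in range(iterations):
        w = (cxx * v[0] + cxy * v[1], cxy * v[0] + cyy * v[1])
        norm = math.hypot(w[0], w[1])
        v = (w[0] / norm, w[1] / norm)
    return v, centered

# Toy data (assumed): points lying close to the line y = 2x.
data = [(1.0, 2.1), (2.0, 3.9), (3.0, 6.2), (4.0, 7.8), (5.0, 10.1)]
component, centered = first_principal_component(data)
# Project each centered point onto the component: 2-D data becomes 1-D scores.
scores = [x * component[0] + y * component[1] for x, y in centered]
```

Because the toy points lie near a line of slope 2, the component points along that line, and the one-dimensional `scores` retain almost all of the variance.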

Semi-Supervised Learning Algorithms

Semi-supervised learning fills the gap between supervised and unsupervised learning by combining labeled and unlabeled data. It is especially useful when obtaining a fully labeled dataset is extremely expensive or impractical.

Self-training

In self-training, the model first trains on a small initial set of labeled data and then extends its training set using its own predictions on additional unlabeled data. The model makes predictions on the unlabeled data and adds the examples it predicts with high confidence to the training set as newly labeled examples. This is one of the simplest forms of semi-supervised learning. It can enlarge the training dataset effectively at almost no additional labeling cost.
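The loop can be sketched with a deliberately simple classifier. Everything here is an illustrative assumption: the data is one-dimensional, the "model" is a nearest-class-mean rule, and confidence is measured as the gap between the distances to the two closest class means (the hypothetical `margin` parameter).

```python
def class_means(labeled):
    """Mean feature value per class for a list of (value, label) pairs."""
    means = {}
    for label in {lab for _, lab in labeled}:
        values = [v for v, lab in labeled if lab == label]
        means[label] = sum(values) / len(values)
    return means

def self_train(labeled, unlabeled, margin=2.0, rounds=5):
    labeled, unlabeled = list(labeled), list(unlabeled)
    for _ in range(rounds):
        means = class_means(labeled)       # retrain on the current labeled set
        still_unlabeled = []
        for x in unlabeled:
            ranked = sorted(means, key=lambda lab: abs(x - means[lab]))
            gap = abs(x - means[ranked[1]]) - abs(x - means[ranked[0]])
            if gap >= margin:              # confident: accept as a pseudo-label
                labeled.append((x, ranked[0]))
            else:                          # not confident yet: try again later
                still_unlabeled.append(x)
        if len(still_unlabeled) == len(unlabeled):
            break                          # nothing new was labeled this round
        unlabeled = still_unlabeled
    return labeled, unlabeled

labeled = [(1.0, "low"), (9.0, "high")]
unlabeled = [1.5, 2.0, 8.0, 8.5, 5.2]
labeled, remaining = self_train(labeled, unlabeled)
```

Starting from two labeled examples, the four clear-cut points are pseudo-labeled, while the ambiguous value 5.2 stays unlabeled because the model is never confident about it.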

Co-training

In co-training, two models are trained separately on different views of the data. For example, one model can work with text data and the other with numeric data. The models then work together: each teaches the other by labeling examples it is confident about, which makes both more accurate because each sees the data from a different view.

Transductive Support Vector Machines

Transductive Support Vector Machines (TSVMs) extend standard SVMs by also using unlabeled data during training. TSVMs try to find a decision boundary that not only separates the labeled data points but also keeps the unlabeled data points as far from the boundary as possible, on the assumption that similar points will have similar labels.

Reinforcement Learning Algorithms

Reinforcement learning (RL) is a branch of machine learning in which an agent learns to make the best choices by acting in an environment so as to collect the most reward over time. RL problems are often modeled as a Markov decision process (MDP).

Q-Learning

Q-Learning is an important value-based algorithm in RL. It uses a Q-table to store the expected return of each action in each state. The agent learns more about its surroundings as it explores them, and it tries to find the action-selection policy that yields the most reward.
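A tabular version can be sketched on a toy problem. The corridor environment below (five states, a reward of 1 for reaching the last one), the `step` helper, and the hyperparameters `alpha`, `gamma`, and `epsilon` are all assumptions chosen for illustration.

```python
import random

N_STATES = 5          # states 0..4; state 4 is the goal (hypothetical toy environment)
ACTIONS = [-1, +1]    # action 0 moves left, action 1 moves right

def step(state, action):
    """Return (next_state, reward, done) for the toy corridor."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]   # the Q-table: one row per state
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: explore sometimes (or on ties), otherwise exploit.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                a = rng.randrange(2)
            else:
                a = q[state].index(max(q[state]))
            next_state, reward, done = step(state, ACTIONS[a])
            # Move the estimate toward reward plus discounted best future value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q

q_table = q_learning()
greedy_policy = [row.index(max(row)) for row in q_table[:-1]]
```

After training, the greedy policy read from the table chooses "move right" in every non-goal state, which is the shortest path to the reward.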

Deep Q Network (DQN)

DQN extends Q-learning with deep neural networks, which act as approximators of the Q-value function. In environments where the state has many dimensions, this method works better than tabular Q-learning. However, training can be unstable, which is why DQN is commonly paired with techniques such as experience replay and a target network.

Proximal Policy Optimization (PPO)

PPO is an on-policy, policy-based RL algorithm that limits how far each update can move the policy. Compared with earlier policy gradient methods, it is more stable and easier to use. PPO is also a practical choice for complicated tasks such as controlling robots or playing video games.
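Much of PPO’s stability is usually attributed to its clipped surrogate objective, which can be written as a short function. The sketch below assumes the probability ratios (new policy over old policy) and the advantage estimates have already been computed; the function name and the sample numbers are illustrative.

```python
def ppo_clipped_objective(ratios, advantages, clip_eps=0.2):
    """Average clipped surrogate objective over a batch of samples."""
    total = 0.0
    for ratio, advantage in zip(ratios, advantages):
        # Restrict the ratio to the interval [1 - eps, 1 + eps].
        clipped_ratio = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        # Take the more pessimistic of the unclipped and clipped terms.
        total += min(ratio * advantage, clipped_ratio * advantage)
    return total / len(ratios)

ratios = [1.0, 1.5, 0.5]         # new-policy probability / old-policy probability
advantages = [1.0, 1.0, -1.0]    # how much better each action was than expected
objective = ppo_clipped_objective(ratios, advantages)
```

The second sample shows the effect: its ratio of 1.5 is clipped to 1.2, so the policy gains nothing from moving further than the clip range allows in a single update.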

- Value-based: Q-Learning, DQN

- Policy-based: PPO

Deep Learning Algorithms

Deep learning algorithms represent a subset of machine learning algorithms that are particularly powerful for tasks involving large amounts of data and complex patterns. These algorithms model high-level abstractions in data using architectures composed of multiple non-linear transformations.

Convolutional Neural Networks (CNN)

CNNs are widely used in computer vision and perform especially well in image and video recognition, classification, and medical image analysis. The architecture of a CNN captures spatial hierarchies in the underlying data through convolutional operations.
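The convolutional operation itself is small enough to write out by hand. The sketch below slides a kernel over an image with no padding and a stride of 1; the tiny `image` and the `vertical_edge` kernel are made-up values for illustration, and in a real CNN the kernel weights would be learned.

```python
def conv2d(image, kernel):
    """Valid (no padding) 2-D cross-correlation, the operation used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the image patch currently under the kernel.
            row.append(sum(image[i + a][j + b] * kernel[a][b]
                           for a in range(kh) for b in range(kw)))
        output.append(row)
    return output

image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
vertical_edge = [[-1, 1], [-1, 1]]   # responds where brightness rises left to right
feature_map = conv2d(image, vertical_edge)
```

The resulting feature map is non-zero only in the column where the dark region meets the bright one, which is how a convolutional filter picks out a local spatial pattern.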

Recurrent Neural Networks (RNN)

RNNs were designed to handle sequential data, such as speech or text. They stand out at retaining information over time through an internal memory, which is pivotal for tasks like language translation and speech recognition. The main feature of an RNN is its loop structure, which allows information to be preserved from one step to the next.
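The loop can be illustrated with a recurrent cell that has a single hidden unit. The weights `w_x` and `w_h` below are fixed, made-up values; a real RNN would learn them and would use vectors and matrices instead of single numbers.

```python
import math

def rnn_forward(inputs, w_x=0.5, w_h=0.8, bias=0.0):
    """Run a one-unit recurrent cell over a sequence of numbers."""
    hidden = 0.0
    states = []
    for x in inputs:
        # The previous hidden state feeds back into the new one: this is the loop.
        hidden = math.tanh(w_x * x + w_h * hidden + bias)
        states.append(hidden)
    return states

states = rnn_forward([1.0, 0.0, 0.0])
```

Only the first input is non-zero, yet the later hidden states are still positive: the cell carries a fading memory of that first input forward through the sequence.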

Autoencoders

Autoencoders are used to learn hidden structure in unlabeled data. An autoencoder learns a representation (encoding) of a given data set, usually with the aim of reducing dimensionality. Autoencoders are known for their effectiveness in feature learning and anomaly detection.

Deep learning algorithms benefit from increasingly powerful parallel processing and are transforming industries by enabling products and services that were previously unattainable. Their ability to learn from large amounts of unstructured data is a significant advantage.

Ensemble Learning Algorithms

Ensemble approaches combine several machine learning models so that they perform better together than any one of them does alone. Combining models is effective at reducing the errors that a single model might make.

Random Forest

Random Forest is a flexible algorithm that builds multiple decision trees and combines their outputs to get a more accurate and consistent prediction. It is popular because of its simplicity and because it handles both classification and regression tasks.
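The two ingredients, bootstrap sampling and voting, can be shown with a heavily simplified forest. As an assumption made for brevity, each "tree" below is a one-split decision stump on one randomly chosen feature; real random forests grow much deeper trees. All function names and the toy `rows` are illustrative.

```python
import random
from collections import Counter

def fit_stump(rows, feature):
    """Best single threshold on one feature for rows of (features, label)."""
    best = None
    for threshold in sorted({r[0][feature] for r in rows}):
        left = [lab for f, lab in rows if f[feature] <= threshold]
        right = [lab for f, lab in rows if f[feature] > threshold]
        if not right:
            continue
        left_label = Counter(left).most_common(1)[0][0]
        right_label = Counter(right).most_common(1)[0][0]
        errors = (sum(lab != left_label for lab in left)
                  + sum(lab != right_label for lab in right))
        if best is None or errors < best[0]:
            best = (errors, threshold, left_label, right_label)
    if best is None:  # all values equal: predict the majority label everywhere
        majority = Counter(lab for _, lab in rows).most_common(1)[0][0]
        return (feature, float("inf"), majority, majority)
    return (feature, best[1], best[2], best[3])

def fit_forest(rows, n_trees=25, seed=0):
    rng = random.Random(seed)
    n_features = len(rows[0][0])
    forest = []
    for _ in range(n_trees):
        sample = [rng.choice(rows) for _ in rows]   # bootstrap sample of the data
        feature = rng.randrange(n_features)         # random feature for this tree
        forest.append(fit_stump(sample, feature))
    return forest

def forest_predict(forest, features):
    votes = [left if features[f] <= t else right for f, t, left, right in forest]
    return Counter(votes).most_common(1)[0][0]      # majority vote across trees

rows = [((1.0, 1.0), "a"), ((2.0, 1.5), "a"), ((1.5, 2.0), "a"),
        ((8.0, 9.0), "b"), ((9.0, 8.0), "b"), ((8.5, 8.5), "b")]
forest = fit_forest(rows)
```

Calling `forest_predict(forest, (0.5, 0.5))` collects one vote per stump and returns the majority label. An individual stump trained on an unlucky bootstrap sample can be wrong, but the vote smooths that out.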

Gradient-Boosting Machines (GBM)

GBM builds trees sequentially, with each new tree correcting the mistakes made by the trees that precede it in the sequence. This technique handles a range of data types and often achieves high predictive accuracy.
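The error-correcting sequence can be sketched for regression with squared error, where "mistakes" are simply the residuals. As a simplifying assumption, each tree is a one-split regression stump on a single feature; the function names, the toy data, and the learning rate are illustrative.

```python
def fit_regression_stump(xs, residuals):
    """Regression stump: the single split that minimizes squared error."""
    best = None
    for threshold in sorted(set(xs))[:-1]:   # skip the largest value so both sides are non-empty
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean, right_mean = sum(left) / len(left), sum(right) / len(right)
        error = (sum((r - left_mean) ** 2 for r in left)
                 + sum((r - right_mean) ** 2 for r in right))
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    return best[1:]

def gbm_predict(base, stumps, learning_rate, x):
    return base + learning_rate * sum(l if x <= t else r for t, l, r in stumps)

def fit_gbm(xs, ys, n_trees=50, learning_rate=0.3):
    base = sum(ys) / len(ys)                 # start from the mean prediction
    stumps = []
    for _ in range(n_trees):
        # Each new stump is fit to the errors left by the current ensemble.
        residuals = [y - gbm_predict(base, stumps, learning_rate, x)
                     for x, y in zip(xs, ys)]
        stumps.append(fit_regression_stump(xs, residuals))
    return base, stumps

xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
ys = [1.2, 1.9, 3.2, 3.8, 5.1, 6.0]
base, stumps = fit_gbm(xs, ys)

def training_mse(n_stumps):
    """Mean squared training error when only the first n_stumps are used."""
    return sum((y - gbm_predict(base, stumps[:n_stumps], 0.3, x)) ** 2
               for x, y in zip(xs, ys)) / len(xs)
```

Comparing `training_mse(0)`, `training_mse(1)`, and `training_mse(50)` shows the training error shrinking as more stumps are added, each one fitted to what the earlier ones got wrong.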

Stacking

Stacking uses a meta-classifier or meta-regressor to combine multiple classifiers or regressors into a single model. The base models are trained on the dataset, and the meta-model learns from their predictions, which it treats as additional input features. The result is often a considerable improvement in the model’s quality.

Because ensemble approaches combine the strengths of their component models while offsetting their individual weaknesses, they can deliver strong predictive power. Ensemble techniques can improve both model stability and prediction accuracy.

Dimensionality Reduction Algorithms

Dimensionality reduction is an important tool in machine learning: it simplifies a dataset by reducing the number of input variables, or features. It plays an essential role in improving the performance of ML algorithms and in data visualization.

t-Distributed Stochastic Neighbor Embedding (t-SNE)

This technique is one of the most widely used for visualizing high-dimensional data. It converts the similarities between data points into joint probabilities and minimizes the Kullback-Leibler divergence between the joint probabilities of the low-dimensional embedding and those of the high-dimensional data.
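Written out, the objective looks roughly as follows. The notation is an assumption introduced here: $x_i$ are the high-dimensional points, $y_i$ their low-dimensional counterparts, $n$ the number of points, and $\sigma_i$ a per-point bandwidth that is set from the perplexity parameter.

```latex
% Similarities in the high-dimensional space (Gaussian kernel)
p_{j \mid i} = \frac{\exp\!\left(-\lVert x_i - x_j \rVert^2 / 2\sigma_i^2\right)}
                    {\sum_{k \neq i} \exp\!\left(-\lVert x_i - x_k \rVert^2 / 2\sigma_i^2\right)},
\qquad
p_{ij} = \frac{p_{j \mid i} + p_{i \mid j}}{2n}

% Similarities in the low-dimensional embedding (Student t kernel, one degree of freedom)
q_{ij} = \frac{\left(1 + \lVert y_i - y_j \rVert^2\right)^{-1}}
              {\sum_{k \neq l} \left(1 + \lVert y_k - y_l \rVert^2\right)^{-1}}

% Cost minimized with respect to the embedding points y_i
C = \mathrm{KL}(P \,\Vert\, Q) = \sum_{i \neq j} p_{ij} \log \frac{p_{ij}}{q_{ij}}
```

The heavy-tailed Student t kernel in the low-dimensional space is where the "t" in the name comes from.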

Autoencoders for Dimensionality Reduction

Autoencoders are a kind of neural network that learns to transform the input data into a compressed form and then decode it back to its original form. This compression reduces the dimension of the data while keeping the loss of information small.
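The encode-then-decode idea can be sketched with the smallest possible autoencoder: a linear one that squeezes two-dimensional inputs into a single number and expands them back. Using the same weights for encoder and decoder (tied weights), plain gradient descent, and the toy `data` are all simplifying assumptions; practical autoencoders use non-linear layers and a deep learning library.

```python
def train_linear_autoencoder(data, epochs=200, learning_rate=0.01):
    """Tied-weight linear autoencoder: 2-D input -> 1-D code -> 2-D output."""
    w = [0.5, 0.5]                                   # shared encoder/decoder weights
    losses = []
    for _ in range(epochs):
        grad = [0.0, 0.0]
        loss = 0.0
        for x in data:
            code = w[0] * x[0] + w[1] * x[1]         # encode to one number
            recon = [w[0] * code, w[1] * code]       # decode back to two numbers
            err = [x[0] - recon[0], x[1] - recon[1]]
            loss += err[0] ** 2 + err[1] ** 2        # squared reconstruction error
            w_dot_err = w[0] * err[0] + w[1] * err[1]
            for j in range(2):
                # Gradient of the squared error with respect to w[j].
                grad[j] += -2.0 * (err[j] * code + w_dot_err * x[j])
        losses.append(loss / len(data))
        w = [w[j] - learning_rate * grad[j] / len(data) for j in range(2)]
    return w, losses

# Toy data (assumed): points that lie exactly on the line y = 2x.
data = [(-2.0, -4.0), (-1.0, -2.0), (0.0, 0.0), (1.0, 2.0), (2.0, 4.0)]
weights, losses = train_linear_autoencoder(data)
codes = [weights[0] * x + weights[1] * y for x, y in data]
```

Because the toy data really is one-dimensional, the reconstruction error falls close to zero and the single number in `codes` is enough to recover each point.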

Feature Selection Techniques

Feature selection picks out the most relevant features for use in model development. It improves the model’s performance by removing highly correlated and redundant features that add little to its predictive power.
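One simple filter of this kind drops any feature that is almost perfectly correlated with a feature already kept. The sketch below is one possible approach, not a standard recipe: the 0.95 threshold, the function names, and the toy `features` are assumptions, and it expects every feature to vary (a constant column would cause a division by zero).

```python
import math

def pearson(a, b):
    """Pearson correlation coefficient of two equal-length lists."""
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)

def drop_redundant(features, threshold=0.95):
    """Keep a feature only if it is not highly correlated with one already kept."""
    kept = []
    for name, values in features.items():
        if all(abs(pearson(values, features[k])) < threshold for k in kept):
            kept.append(name)
    return kept

features = {
    "height_cm": [150.0, 160.0, 170.0, 180.0, 190.0],
    "height_in": [59.1, 63.0, 66.9, 70.9, 74.8],   # same information as height_cm
    "age": [31.0, 25.0, 47.0, 22.0, 38.0],
}
selected = drop_redundant(features)
```

Here `height_in` is dropped because it duplicates `height_cm`, while `age` is kept because it carries different information.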

Dimensionality reduction techniques simplify data in ways that make further analysis easier, whether you are a data scientist, a researcher, or an enthusiast.

Conclusion

The main machine learning methods covered above are essential knowledge for anyone wishing to explore the field. Every kind of learning, from supervised learning, which uses labeled data, to unsupervised learning, which looks for patterns in unlabeled data, and reinforcement learning, which teaches agents to make decisions, has its own use cases and level of difficulty. Additionally, when labeled data is in short supply, hybrid approaches such as semi-supervised and self-supervised learning can be very helpful. Looking at specific examples helps us grasp the potential of machine learning (ML) to solve complex problems and its applicability in many different domains.

Frequently Asked Questions

The primary kinds of machine learning algorithms are supervised, unsupervised, semi-supervised, reinforcement, deep learning, ensemble, and dimensionality reduction algorithms.

An example of a supervised learning algorithm is linear regression, which is used to predict a continuous variable.

- In supervised learning, the system learns from examples where the correct answer is available, while in unsupervised learning, the system discovers patterns and relationships without explicit guidance.

- Supervised learning uses a labeled data set to train the model, which predicts outcomes based on known pairs of inputs and results. Unsupervised learning, for its part, operates on unlabeled data to search for associations and underlying structures that have not yet been defined.

Q-Learning is an illustrative reinforcement learning algorithm: the agent learns which action to take by receiving rewards or penalties.

The main reason for utilizing ensemble learning models is to perform better than individual models.

Ensemble learning relies on multiple models to deliver more stable and more accurate predictions than a single model can. Random Forest is one example.

Deep learning algorithms are based on multilayer neural networks, whose capacity and accuracy on tasks such as recognizing images or speech improve as they are trained on more data.