Generative Adversarial Networks (GANs): A Deep Dive

Generative Adversarial Networks (GANs) are a class of generative models in deep learning that learn to produce new data samples resembling the data they were trained on. Rather than fitting a likelihood directly, they train two neural networks against each other. This document covers their components, training procedure, common variants, applications, and limitations.

1. Understanding the Core Concept:

Imagine a competitive game between two players: an artist and an art critic. The artist strives to create art that is indistinguishable from real masterpieces, while the critic tries to tell genuine artwork from the artist’s forgeries. As each improves, the other is forced to improve too. This competition is the essence of a GAN.

A GAN comprises two deep neural networks:

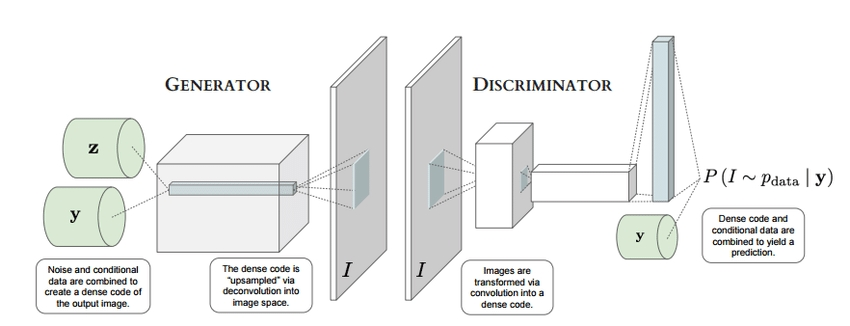

- Generator: This network acts as the artist, constantly learning and refining its ability to produce new data samples that mimic the characteristics of the training data. It typically utilizes a deep neural network architecture with multiple layers, often including convolutional layers for image generation.

- Discriminator: This network plays the role of the art critic, meticulously analyzing both real data samples and the generated samples from the generator. Its goal is to accurately distinguish between the two, classifying them as either real or fake.
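To make the two roles concrete, here is a minimal sketch of the interfaces involved, using only the Python standard library. The layer sizes, the `dense` and `init_layer` helpers, and the activation choices are illustrative assumptions, not something the text above prescribes; real GANs would use a deep learning framework and far larger networks.

```python
import math
import random


def dense(x, weights, biases):
    # One fully connected layer: y[j] = sum_i x[i] * weights[i][j] + biases[j]
    return [sum(xi * row[j] for xi, row in zip(x, weights)) + biases[j]
            for j in range(len(biases))]


def init_layer(n_in, n_out, rng):
    # Small random weights and zero biases (hypothetical initialization).
    weights = [[rng.gauss(0.0, 0.1) for _ in range(n_out)] for _ in range(n_in)]
    return weights, [0.0] * n_out


def generator(z, layers):
    # Artist: maps a noise vector to a data sample with values in [-1, 1].
    hidden = [math.tanh(v) for v in dense(z, *layers[0])]
    return [math.tanh(v) for v in dense(hidden, *layers[1])]


def discriminator(x, layers):
    # Critic: maps a data sample to the estimated probability that it is real.
    hidden = [v if v > 0 else 0.2 * v for v in dense(x, *layers[0])]  # leaky ReLU
    (logit,) = dense(hidden, *layers[1])
    return 1.0 / (1.0 + math.exp(-logit))


rng = random.Random(0)
NOISE_DIM, HIDDEN_DIM, DATA_DIM = 4, 16, 8  # assumed sizes for illustration

g_layers = [init_layer(NOISE_DIM, HIDDEN_DIM, rng),
            init_layer(HIDDEN_DIM, DATA_DIM, rng)]
d_layers = [init_layer(DATA_DIM, HIDDEN_DIM, rng),
            init_layer(HIDDEN_DIM, 1, rng)]

z = [rng.gauss(0.0, 1.0) for _ in range(NOISE_DIM)]
fake_sample = generator(z, g_layers)
p_real = discriminator(fake_sample, d_layers)
```

The generator never sees real data directly; it only receives noise as input, and learns about the data through the discriminator’s feedback during training.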

2. The Adversarial Training Process:

The training process in a GAN involves an iterative loop that resembles the artist-critic competition:

- Generator Produces Data: The generator network takes a random noise vector as input and utilizes its internal parameters to transform it into a new data sample. This sample could be an image, a piece of text, or any other data type the GAN is trained on.

- Discriminator Evaluates Data: Both real data samples and the generated samples from the generator are fed into the discriminator network.

- Loss Calculation: A loss function, such as binary cross-entropy, measures the discrepancy between the discriminator’s predictions (real or fake) and the actual labels.

- Network Updates: Based on the calculated loss, both networks update their internal parameters through backpropagation, usually in alternating steps. The discriminator aims to minimize its classification loss by correctly labeling real and generated data. The generator is pushed in the opposite direction: its loss is low when the discriminator labels generated samples as real, so it improves by producing data that fools the discriminator.
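The steps above are usually written as a two-player minimax objective. As a restatement in standard notation (the symbols $G$, $D$, $p_{\text{data}}$ and $p_z$ are introduced here for illustration): $D(x)$ is the discriminator’s estimated probability that $x$ is real, and $G(z)$ is the sample the generator produces from noise $z$.

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
$$

With binary cross-entropy, the discriminator’s loss is $L_D = -V(D, G)$, so minimizing that loss is the same as maximizing $V$. In practice the generator is often trained with the non-saturating loss $L_G = -\mathbb{E}_{z \sim p_z}\left[\log D(G(z))\right]$ instead of minimizing $V$ directly, because it gives stronger gradients early in training, when the discriminator easily rejects generated samples.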

This loop continues iteratively, with each network constantly learning and improving based on the other’s performance. As the generator becomes adept at creating realistic data, the discriminator is forced to refine its classification skills to maintain its ability to distinguish real from fake.
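The whole loop can be sketched on a toy problem using only the Python standard library. Everything specific here is an assumption chosen for illustration: the real data is one-dimensional and drawn from a normal distribution with mean 4, the generator is the affine map `G(z) = a * z + b`, the discriminator is the logistic model `D(x) = sigmoid(w * x + c)`, and the gradients are derived by hand. A real GAN would use deep networks and automatic differentiation.

```python
import math
import random


def sigmoid(s):
    # Numerically stable logistic function.
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def train(steps=200, batch=64, lr=0.05, seed=0):
    rng = random.Random(seed)
    a, b = 1.0, 0.0   # generator:     G(z) = a * z + b
    w, c = 0.0, 0.0   # discriminator: D(x) = sigmoid(w * x + c)
    eps = 1e-12       # keeps log() away from zero
    history = []
    for _ in range(steps):
        # 1. Generator produces data from random noise.
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [a * z + b for z in noise]
        real = [rng.gauss(4.0, 0.5) for _ in range(batch)]

        # 2. Discriminator evaluates real and generated samples.
        d_real = [sigmoid(w * x + c) for x in real]
        d_fake = [sigmoid(w * x + c) for x in fake]

        # 3. Binary cross-entropy: real samples labeled 1, generated 0.
        d_loss = -(sum(math.log(p + eps) for p in d_real)
                   + sum(math.log(1.0 - p + eps) for p in d_fake)) / batch

        # 4a. Discriminator update: gradient descent on d_loss.
        grad_w = (sum((p - 1.0) * x for p, x in zip(d_real, real))
                  + sum(p * x for p, x in zip(d_fake, fake))) / batch
        grad_c = (sum(p - 1.0 for p in d_real) + sum(d_fake)) / batch
        w -= lr * grad_w
        c -= lr * grad_c

        # 4b. Generator update: non-saturating loss -log D(G(z)),
        # evaluated against the freshly updated discriminator.
        d_fake = [sigmoid(w * x + c) for x in fake]
        g_loss = -sum(math.log(p + eps) for p in d_fake) / batch
        grad_a = sum((p - 1.0) * w * z for p, z in zip(d_fake, noise)) / batch
        grad_b = sum((p - 1.0) * w for p in d_fake) / batch
        a -= lr * grad_a
        b -= lr * grad_b

        history.append((d_loss, g_loss))
    return (a, b), (w, c), history
```

Calling `train()` returns the final generator parameters, the final discriminator parameters, and the per-step losses. Because this discriminator is linear in `x`, it can only push the generator’s offset `b` toward the real mean, and the parameters can oscillate around that point rather than settle. The sketch is meant to show the structure of the loop, not a well-behaved training recipe.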

3. Architectural Variations:

While the core concept remains the same, different types of GANs exist with specific architectural variations:

- Vanilla GAN: This is the original GAN architecture, where the generator and discriminator directly compete as described above.

- Deep Convolutional GANs (DCGANs): These GANs utilize convolutional neural networks for both the generator and discriminator, making them particularly suitable for generating images. The convolutional layers allow them to capture the spatial relationships within the data, leading to more realistic image generation.

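- Wasserstein GANs (WGANs): These GANs address the instability and vanishing gradients that can occur in vanilla GANs by replacing the cross-entropy loss with an estimate of the Wasserstein (earth mover’s) distance between the real and generated distributions. The discriminator becomes a "critic" that outputs an unbounded score rather than a probability, and it is constrained to be approximately Lipschitz-continuous, for example through weight clipping or a gradient penalty. This tends to give smoother training and a loss value that tracks sample quality more closely.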

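- CycleGANs: These GANs are specifically designed for image-to-image translation without paired training examples. They utilize two generators and two discriminators, together with a cycle-consistency loss that requires an image translated to the other domain and back to match the original. This allows them to learn the mapping between two different image domains (e.g., horse to zebra).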
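To illustrate how the Wasserstein variant in the list above changes the loss, here is a minimal sketch using only the Python standard library. The one-dimensional linear critic and the function names are hypothetical; the clipping limit of 0.01 follows the value commonly used with the original weight-clipping formulation, and a gradient penalty is a frequently used alternative.

```python
def critic(x, w, c):
    # Unbounded real-valued score: no sigmoid, so this is not a probability.
    return w * x + c


def mean(values):
    return sum(values) / len(values)


def critic_loss(real, fake, w, c):
    # The critic wants high scores on real data and low scores on generated
    # data, so it minimizes mean(score(fake)) - mean(score(real)).
    return mean([critic(x, w, c) for x in fake]) - mean([critic(x, w, c) for x in real])


def generator_loss(fake, w, c):
    # The generator wants the critic to score its samples highly.
    return -mean([critic(x, w, c) for x in fake])


def clip(value, limit=0.01):
    # Weight clipping: a crude way to keep the critic approximately Lipschitz.
    return max(-limit, min(limit, value))
```

After each critic update, every critic weight would be passed through `clip`. The negative of the critic loss then serves as a rough estimate of the Wasserstein distance between the real and generated samples.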

4. Applications and Impact:

The potential applications of GANs are vast and constantly evolving. Here are some key areas where they are making significant contributions:

- Image Generation: GANs excel at creating photorealistic images of faces, landscapes, objects, and even entirely new scenes. This has applications in various fields, including art, design, and even medical imaging.

- Video Generation: Similar to image generation, GANs can be used to create realistic videos, including facial expressions, actions, and even entire scenes. This opens doors for applications in entertainment, education, and simulation.

- Image Editing: GANs can be used to manipulate and enhance existing images, enabling tasks like style transfer, inpainting (filling in missing parts of an image), and super-resolution (increasing image resolution).

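- Text Generation: GANs have also been applied to text, but this is harder than image generation. Text is made of discrete tokens, so gradients cannot flow directly from the discriminator back through the generator’s sampled output. Text GANs work around this with techniques such as reinforcement-learning-style updates or continuous relaxations, and their results are generally less convincing than those for images.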

- Data Augmentation: GANs can be used to artificially expand datasets by generating additional data samples that share the same characteristics as the original data. This is particularly useful in situations where labeled data is scarce.

5. Advantages and Considerations:

GANs offer several advantages over traditional generative models:

- High-Quality Data Generation: GANs can produce sharp, realistic samples. For well-studied domains such as face images, the strongest models generate outputs that people often cannot tell apart from real photographs.

- Versatility: GANs can be applied to various data types, including images, videos, and text, making them a highly versatile tool.

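- Unsupervised Learning: GANs are trained on unlabeled data. The only labels involved are the "real" and "fake" tags that the training procedure assigns automatically. This makes them suitable for situations where labeled data is limited, although they still need a substantial amount of unlabeled training data.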

However, implementing and utilizing GANs also comes with some considerations:

- Training Complexity: Training GANs can be difficult. The two networks may fail to converge or may oscillate, and the generator can suffer mode collapse, where it produces only a narrow range of outputs. Careful selection of hyperparameters and loss functions is crucial.

- Black-Box Nature: Understanding the internal workings of GANs can be difficult, making it harder to explain why a particular output was generated or to verify that the outputs do not reproduce biases present in the training data.

- Computational Cost: Training GANs often requires significant computational resources due to the complex nature of the architecture and the iterative training process.

6. Future of GANs:

GAN research is a rapidly evolving field with new architectures and applications emerging constantly. Some promising areas of advancement include:

- Improved Stability and Control: Researchers are actively exploring ways to make GAN training more stable and controllable, leading to more predictable and reliable results.

- Interpretability: Efforts are underway to develop methods for better understanding the internal workings of GANs, allowing for greater transparency and control over the generated data.

- Novel Applications: As GAN technology continues to mature, we can expect to see even more innovative applications in various fields, pushing the boundaries of creative content generation and data manipulation.

In conclusion, Generative Adversarial Networks represent a powerful and versatile tool within the realm of deep learning. Their ability to create highly realistic data samples has opened doors to numerous applications across various domains. While challenges remain in terms of training complexity and interpretability, the future of GANs holds immense potential for further advancements and groundbreaking applications.